How to Talk to your Computer with Python and OpenAI’s Whisper on your personal machine

Speech to text with a neural network running locally

How To Talk to Your Computer With Python and OpenAI’s Whisper on Your Personal Machine

Speech-to-text with a neural network running locally

If you’ve watched the Iron Man movies, you’re well aware of the how helpful Jarvis can be. If not, think of an English Butler trapped in a computer. One critical aspect of Jarvis is his ability to understand what you say. This is known as speech to text and is something I will try and re-create in this post using python and a bit of machine learning.

For a little taste of what is to come, check out this short video:

Video by Author

You may use the below material as a quick, standalone guide for speech-to-text. However, this is the second part of a broader series that uses Python to build your own computer assistant. If you want to learn more, see here for the first part of the series that uses Python to make your computer talk.

What is speech-to-text?

Speech to text (as well as text to speech) are natural language processing problems, which is often shortened to the acronym, NLP. Any NLP problem involves taking the written or spoken word, doing some computation and producing an output or prediction.

Speech recognition software breaks an audio recording into individual sounds, analyses the sounds, and then uses an algorithm to find the most probable words that fit the sounds. The hand-crafted approach to this algorithm is to find the closest match of each sound to those in a large database, or to examples that share characteristics of the recording. However, this process is difficult to implement in code by hand.

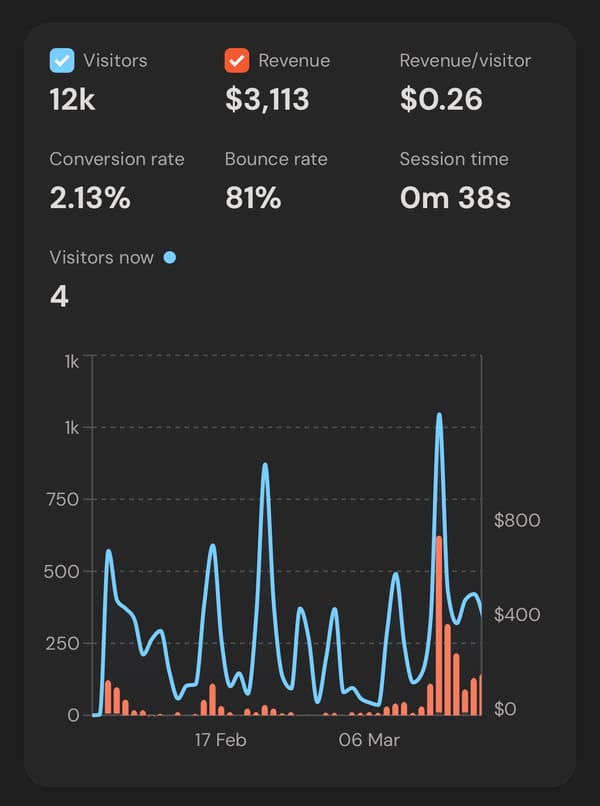

Speech to text has to deal with something known as the “cocktail-party problem”. This is a problem humans solve easily, where we can isolate single voices from that of many. Hence, many new highly-successful speech-to-text applications simulate neurons (the cells in our brains) with neural networks and train them to complete the task with examples (a process called supervised learning). Some notable examples of this are Deep Karaoke and work by Yu, D and others. If you did not know, the “deep” in deep-learning just refers to the use of a very large, or, in other words, deep, neural network (i.e. those that have many layers of neurons).

Because the burden of how to solve the problem is shifted to the neural network, the creator of the algorithm often has a drastically reduced workload. This means that neural networks can solve problems that would be infeasible for humans to hand-craft with traditional methods.

One recent deep-learning-based application that has been getting a lot of attention is Google Duplex. See the video, here. At a developer conference in May 2018, Google unveiled this new and upcoming feature for Google Assistant, that can call people and chat, just like a human assistant. They demoed this application by ringing a salon and restaurant and making a booking for times the user requested. One really striking thing about this application, is how well the neural network could understand poorly structured sentences.

Finding a speech-to-text model

There is a good selection of speech-to-text packages available to Python. What these packages do is either: 1) offload the work to an API that has an intelligent algorithm, most use a deep learning algorithm, or 2) use a separate program (pocketsphinx). A few commonly used ones are:

For a nice tutorial on how to use a popular wrapper around these libraries, SpeechRecognition, see https://realpython.com/python-speech-recognition/.

After talking up deep learning methods, I think it would be nice to use one. I also don’t want to have to pay to use a model that can be run on most laptops for free. We will also skip out on the api wrappers and run this model ourselves locally with the transformers library.

One of the more cutting edge models you can run yourself is OpenAI’s Whisper, which was open sourced and is freely accessible and downloadable from huggingface.

Initial setup

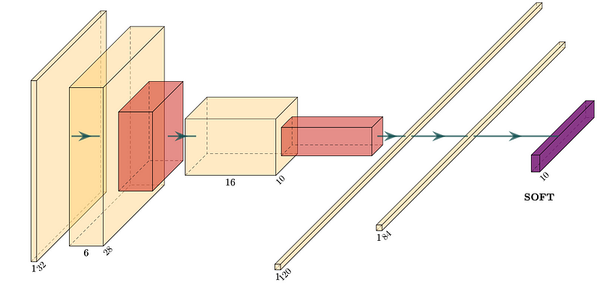

Now, before we get started running everything, let’s set up our project. If you are continuing on from my previous post, then you have already done this step. Feel free to activate your virtual environment and skip this section.

We will be doing everything through a text editor and terminal. If you do not know what that means, then a text editor I recommend for people getting started is vscode and a terminal is usually built into your text editor (like in vscode) or is a program on your computer called “terminal” or “cmd”.

Now, I want you to open your terminal and change directory to where you keep your projects, e.g. with

cd ~/projectsNext, create a directory to store our project. This is completely up to you, but I want my assistant to be called Robert. So, I’m creating a new directory called “robert” and then changing into that directory with

mkdir robert

cd robertYou can change the name to whatever you like, e.g. Brandy or Kumar or something.

Next up, we need to get python up and running. For this, we will need python 3 installed. If you don’t have this installed, see https://www.python.org/ for installation instructions. We will also need to create a python virtual environment. If you want to learn more about this, see here for one of my recent posts.

You can verify your python installation in the terminal with:

python3 --versionYou should now be able to create your python virtual environment inside your robert directory with:

python3 -m venv venvNote, if the version of python you installed is called py ,python, python3.7, python3.9 or anything else, then use that

You should then be able to activate your virtual environment with the following:

(on MacOS and Linux)

source venv/bin/activateor (Windows)

venv\Scripts\activateInstall packages and dependencies

We now need to install our required python packages. To do this, we will create a requirements.txt file or edit our previous file. This requirements.txt file will help us keep track of what packages we are using for our project.

transformers

torchaudio

gradioThen (assuming you are at your terminal in the same directory) install your requirements

pip install -r requirements.txtOne of our packages (gradio) requires ffmpeg to be installed, as it uses it to process audio recordings behind the scenes.

If you have a mac with homebrew, installation is as simple as

brew install ffmpegSimilarly, on linux you can install it with your favourite package manager (e.g. for ubuntu)

sudo apt install ffmpegOn windows, follow instructions at the ffmpeg site: https://ffmpeg.org/

Creating the script

If you‘re thinking “Show me the code already!”, here’s the full script for our file called conversation.py:

import gradio as gr

from transformers import pipeline

import numpy as np

transcriber = pipeline("automatic-speech-recognition", model="openai/whisper-base.en")

def transcribe(stream, new_chunk):

sr, y = new_chunk

y = y.astype(np.float32)

y /= np.max(np.abs(y))

if stream is not None:

stream = np.concatenate([stream, y])

else:

stream = y

return stream, transcriber({"sampling_rate": sr, "raw": stream})["text"]

demo = gr.Interface(

transcribe,

["state", gr.Audio(sources=["microphone"], streaming=True)],

["state", "text"],

live=True,

)

demo.launch()The full conversation.py code

But, if you want to better understand what’s going on, here’s an explanation of what each part does below.

First, we import some required packages

import gradio as gr

from transformers import pipeline

import numpy as npThe gradio library provides an intuitive UI. The transformers library enables us to leverage the Whisper model, and numpy aids in array operations.

Next, define the model and transcribing function.

transcriber = pipeline("automatic-speech-recognition", model="openai/whisper-base.en")

def transcribe(stream, new_chunk):

sr, y = new_chunk

y = y.astype(np.float32)

y /= np.max(np.abs(y))

if stream is not None:

stream = np.concatenate([stream, y])

else:

stream = y

return stream, transcriber({"sampling_rate": sr, "raw": stream})["text"]To use OpenAI’s Whisper, we’ve established a transcription pipeline. This script handles incoming audio chunks, processes them, and transcribes the speech.

Finally, we wrap it all up with a Gradio interface

demo = gr.Interface(

transcribe,

["state", gr.Audio(sources=["microphone"], streaming=True)],

["state", "text"],

live=True,

)and start the Gradio server.

demo.launch()The Gradio interface establishes a live demo that incorporates the continuous transcription feature, allowing real-time interaction with the Whisper model.

Now, run this file

python conversation.pyYou should see an output telling you about the URL to navigate to. Launch the live demo by clicking the URL provided in the output.

I think it’s pretty amazing that you can get such a good speech to text model running on your own machine in real-time.

It’s multilingual too! So, if you speak another language, give that a go.

And that’s it! You now have a Python script capable of real-time speech recognition, ready to serve as the foundation for your very own Jarvis-like assistant.

If you’d like to see me combine this speech-to-text model with text-to-speech and a GPT “brain”, please comment below. Also, let me know if you have any cool ideas we can get our personal AI assistants to do.

Have fun!